I've started using Codex as my personal video editor.

My first experiment was animating some effects end-to-end. This time I wanted to try something fancier: the classic "text behind me" effect, without green screen, without opening Premiere.

Here's the final result:

Everything in this video was done 100% through Codex. No timeline editor. Just chatting back and forth in the terminal and iterating on a Remotion project.

Here's how I did it.

Disclaimers

Before anyone points things out:

- This took longer than manual editing would have, mainly because I'm still building the workflow and the primitive tools that a traditional editor gives you for free. Masking and matting are a good example. I'm basically rebuilding those pieces (with Codex) and then using them.

- It's also not real-time. I had a rough storyboard in my head when I started shooting. I shot the video first, then went to the terminal to "talk" to Codex and edit/animate offline.

- That said, the overlays, effects, and everything else you see in the final video were produced through Codex-driven code iteration. I mostly steered with feedback and taste.

The toolchain

To achieve the effect, after some brainstorming with Codex, here's what we came up with.

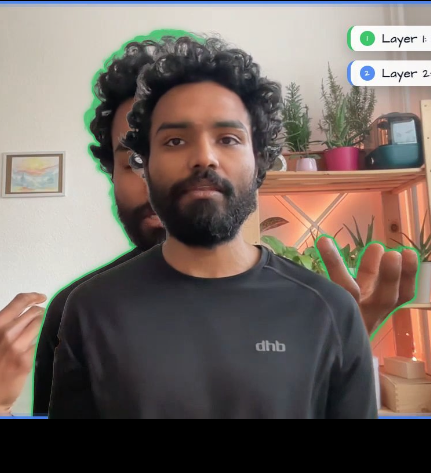

SAM3

- Input: a prompt ("person") and the source video

- Output: a static segmentation mask (typically for just one frame, since its job is to drive the next step)

MatAnyone

- Input: the source video + the static mask from SAM3

- Output: a tracked foreground matte across the full video (this is what makes occlusion possible)

Remotion

- Input: background video + foreground alpha + text overlays

- Output: the final composed video
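The layer order is what makes the effect work: the text goes on top of the background, and the matted foreground goes on top of both, so the person hides the text wherever the matte is opaque. Here's a minimal per-pixel sketch of that stacking, assuming 8-bit RGB values and alpha values between 0 and 1. The function names are made up for illustration; they are not part of Remotion or MatAnyone.

```python
def over(top, bottom, alpha):
    """Blend `top` over `bottom`, where `alpha` (0.0 to 1.0) is the coverage of `top`."""
    return tuple(round(t * alpha + b * (1.0 - alpha)) for t, b in zip(top, bottom))


def composite_pixel(background, text, text_alpha, person, matte_alpha):
    """Stack three layers: background first, then text, then the matted person."""
    with_text = over(text, background, text_alpha)
    return over(person, with_text, matte_alpha)


# Where the matte is fully opaque, the person covers the text.
print(composite_pixel((0, 0, 0), (255, 255, 255), 1.0, (200, 100, 50), 1.0))
# Where the matte is fully transparent, the text shows through.
print(composite_pixel((0, 0, 0), (255, 255, 255), 1.0, (200, 100, 50), 0.0))
```

In the real project Remotion does this blending per frame; the sketch only shows why a tracked matte is the piece that makes occlusion possible.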

Luckily, all three tools are open source. You can try them yourself:

I asked Codex to build client tools for SAM3 and MatAnyone. My Mac only has 8 cores, so I deployed both models on Modal, and the clients Codex built call those endpoints.

How I actually work on these

People ask me how long this takes and how I approach it.

I usually start with a rough storyboard in mind. I already know how it should look, at least vaguely and abstractly. Then I go to Codex and start iterating.

This one took about 8-9 hours, mainly because getting MatAnyone to work reliably was hard. There were instances where the output was completely wrong:

Once the client tools were working, the actual Codex iteration was easier.

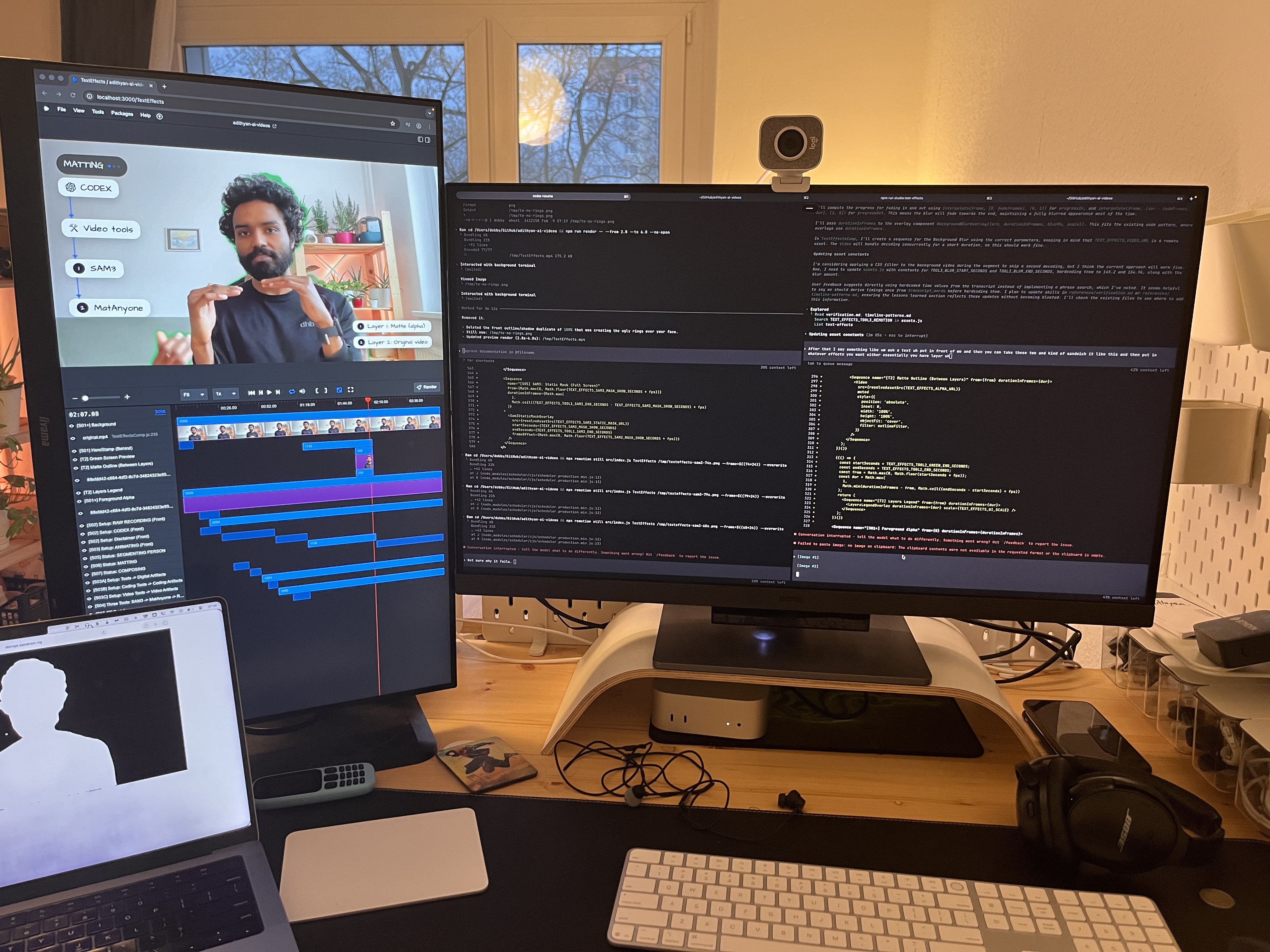

Here's what my screen typically looks like when I'm working on these. Remotion preview on the left, terminal on the right:

I keep a rough storyboard in the GitHub repo. Here's an example storyboard.json. Then I work with multiple Codex instances in parallel for different parts of the storyboard.

People also ask how I get the animations timed correctly to the words. I explained this in more detail in my last post, but basically: we generate a transcript JSON with word-level timestamp information. Here's an example transcript.json. Then I just tell Codex "at this word, do this" and it uses those timestamps to sync everything.
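To make the "at this word, do this" idea concrete, here's a rough sketch of turning a word's timestamp into a frame number. The transcript shape (a `words` list with `word`, `start`, and `end` in seconds), the 30 fps default, and the `frame_for_word` helper are all assumptions for illustration; the actual transcript.json may look different.

```python
import json

# Hypothetical transcript layout: one entry per spoken word, times in seconds.
SAMPLE_TRANSCRIPT = json.loads("""
{
  "words": [
    {"word": "This", "start": 0.0, "end": 0.2},
    {"word": "text", "start": 1.5, "end": 1.8},
    {"word": "is", "start": 1.8, "end": 1.9},
    {"word": "behind", "start": 2.0, "end": 2.4},
    {"word": "me.", "start": 2.5, "end": 2.8}
  ]
}
""")


def frame_for_word(transcript, word, fps=30, occurrence=0):
    """Return the frame at which the given occurrence of `word` starts."""
    target = word.lower()
    matches = [
        entry for entry in transcript["words"]
        if entry["word"].strip(".,!?").lower() == target
    ]
    if occurrence >= len(matches):
        raise ValueError(f"{word!r} occurs only {len(matches)} time(s)")
    return round(matches[occurrence]["start"] * fps)


print(frame_for_word(SAMPLE_TRANSCRIPT, "behind"))  # start of "behind" at 30 fps
```

The resulting frame number is what an animation would key off, for example as the start frame of a text overlay.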

Also, one tip I picked up from an OpenAI engineer: close the loop with the agent. Have it review its own output by looking at the rendered images and iterating on them. I used this for this video and it helped. I haven't nailed it yet, since I'm still learning how best to do this, but in many cases Codex was able to self-review. What saved me a lot of time was a script that renders only certain frames in Remotion so Codex can review them.
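Here's a minimal sketch of what such a frame-rendering script could look like. It is not the script from my repo. It assumes the Remotion CLI's `still` command with its `--frame` flag, an entry point at `src/index.ts`, and a made-up composition id, so check the command against your installed Remotion version before relying on it.

```python
import subprocess
from pathlib import Path


def still_commands(composition_id, frames, out_dir="review", entry_point="src/index.ts"):
    """Build one `remotion still` command per unique frame, in ascending order."""
    commands = []
    for frame in sorted(set(frames)):
        output = (Path(out_dir) / f"{composition_id}-{frame:05d}.png").as_posix()
        commands.append(
            ["npx", "remotion", "still", entry_point, composition_id, output, f"--frame={frame}"]
        )
    return commands


def render_review_frames(composition_id, frames, out_dir="review"):
    """Render the selected frames to PNGs so the agent can look at them."""
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    for command in still_commands(composition_id, frames, out_dir):
        subprocess.run(command, check=True)


if __name__ == "__main__":
    # "TextBehindMe" is a hypothetical composition id; nothing is rendered here.
    for command in still_commands("TextBehindMe", [45, 60, 75]):
        print(" ".join(command))
```

Rendering a handful of stills around each animation cue is much faster than rendering the whole video, which is what makes the review loop cheap enough to run on every iteration.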

Code

Here are the artifacts that Codex and I generated. It's a Remotion project:

I open-sourced it because people asked after the last post. Fair warning though: this is just a dump of what I have, not a polished "clone and run" setup. You can use it for inspiration, but it won't work out of the box. I'll clean it up to be more plug-and-play soon.

Closing

This took longer than doing it manually.

We're building an editor from first principles. A traditional editor comes with a lot of tools built in. We don't have those yet. Building them is taking time.

But unlike a traditional editor, the harness driving all these tools is super intelligent. Once Codex has the same toolkit, it'll be way more capable than any traditional editor could be. That's the journey.

I'm going to be spending more time building these primitives.

More soon.